Algorithmic Justice League audits the auditors (and why it matters from a privacy perspective)

Whether you're thinking about it from a research, technology, policy, or strategy perspective, it's well worth the time to delve into this paper.

"Algorithmic audits (or `AI audits') are an increasingly popular mechanism for algorithmic accountability; however, they remain poorly defined. Without a clear understanding of audit practices, let alone widely used standards or regulatory guidance, claims that an AI product or system has been audited, whether by first-, second-, or third-party auditors, are difficult to verify and may potentially exacerbate, rather than mitigate, bias and harm."

- From Algorithmic Justice League's Who audits the auditors? site

Yeah really. Investigating algorithms and the systems built around them for bias and accuracy is vital both from an algorithmic justice perspective and from a privacy perspective – as we see with the American Data Privacy and Protection Act (ADPPA), where legislators and advocates have all highlighted the importance of the requirements that "large data holders" conduct algorithmic impact assessments. But as EPIC Privacy warns in their ADPPA testimony, there's a risk that these can simply become "box-checking exercises", in the same way that privacy laws have turned into privacy theater. How to avoid that?

Who Audits the Auditors? Recommendations from a field scan of the algorithmic auditing ecosystem, by Sasha Costanza-Chock, Inioluwa Deborah Raji, and Joy Buolamwini of the Algorithmic Justice League (AJL), highlights some key considerations. Based on interviews with industry leaders and a survey, the authors identify best practices and offer six valuable policy recommendations:

- Require the owners and operators of AI systems to engage in independent algorithmic audits against clearly defined standards

- Notify individuals when they are subject to algorithmic decision-making systems

- Mandate disclosure of key components of audit findings for peer review

- Consider real-world harm in the audit process, including through standardized harm incident reporting and response mechanisms

- Directly involve the stakeholders most likely to be harmed by AI systems in the algorithmic audit process

- Formalize evaluation and, potentially, accreditation of algorithmic auditors.

This paper was just presented at the ACM Conference on Fairness, Accountability, and Transparency (FAccT ’22) – but the audience for it is a lot broader than just AI researchers, practitioners, and auditors. For one thing, it's information that legislators and regulators looking at privacy or algorithmic justice really need to take into account.

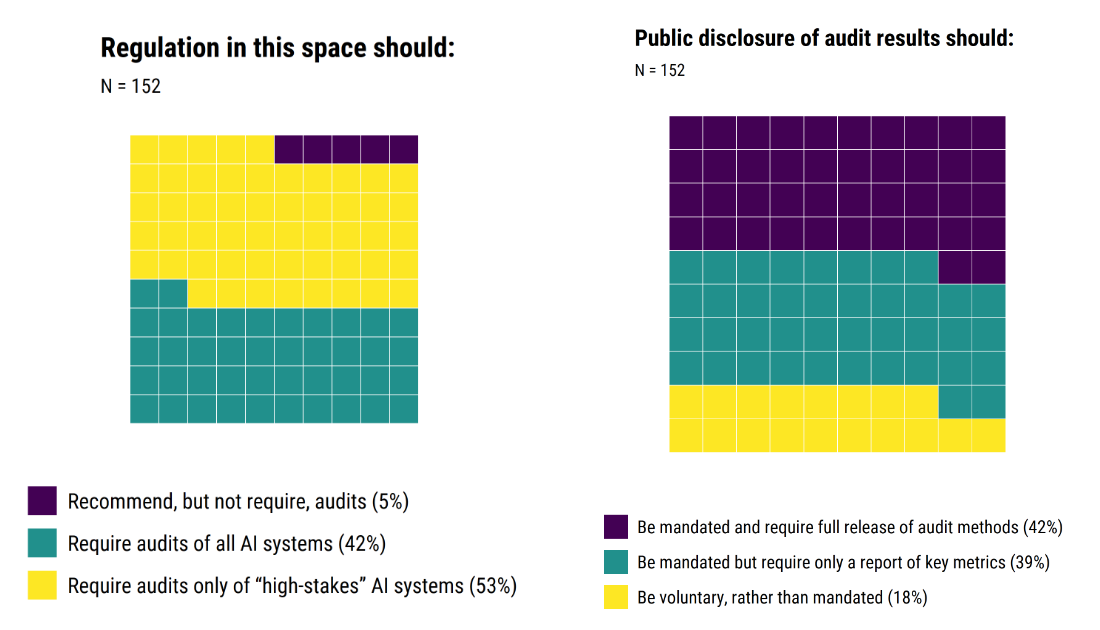

"There is a consensus among practitioners that current regulation is lacking, as well as agreement about some areas that require mandates. Auditors and non-auditors alike overwhelmingly agree that AI audits should be mandated (95%), and that the results of these audits, in part or in full, should be disclosed (82%)"

Laws and regulations are increasingly requiring auditing, at least for some systems. But the devil is in the details: will the laws and regulations require meaningful audits, or will they just be a checkbox exercise? Who audits the auditors? is a great lens for improving proposals currently in the pipeline.

Returning to the ADPPA as an example: while the language in the current draft of the bill does require audits in many cases, the details fall short of these recommendations in several ways. For the bill to be effective at protecting civil rights, requirements like disclosure of audit results and involving the stakeholders most impacted by systems will need to be added.

Who audits the auditors? is also like gold from a corporate strategy perspective, both for companies that see responsible AI as a competitive business advantage and for algorithmic auditing startups. Strategically, one way to think of work like this is as spotlighting places where "best practices" are not yet widely adopted – leaving a lot of opportunities for significant competitive advantages. For example:

"[A]lthough many auditors consider analysis of real-world harm (65%) and inclusion of stakeholders who may be directly harmed (41%) to be important in theory, they rarely put this into practice."

So, whether you're thinking about it from a research, technology, policy, or strategy perspective, it's well worth the time to delve into Who Audits the Auditors? Recommendations from a field scan of the algorithmic auditing ecosystem. Fortunately, it's a very readable paper, with a wealth of information, and an excellent reference list. Kudos to the authors, and to the Algorithmic Justice League for taking on projects like this.

And if you don't have time right now to read the paper, no worries ... the authors have a short video as well!